Smashing Podcast Episode 41 With Eva PenzeyMoog: Designing For Safety

In this episode, we’re talking about designing for safety. What does it mean to consider vulnerable users in our designs? Drew McLellan talks to expert Eva PenzeyMoog to find out.

Show Notes

- Design for Safety from A Book Apart

- The Inclusive Safety Project

- Eva on Twitter

- Eva’s personal site

Weekly Update

- How To Build An E-Commerce Site With Angular 11, Commerce Layer And Paypal written by Zara Cooper

- Refactoring CSS: Strategy, Regression Testing And Maintenance written by Adrian Bece

- How To Build Resilient JavaScript UIs written by Callum Hart

- React Children And Iteration Methods written by Arihant Verma

- Frustrating Design Patterns: Disabled Buttons written by Vitaly Friedman

Transcript

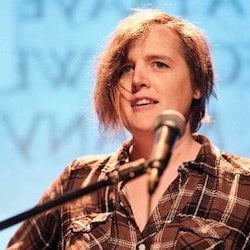

Drew McLellan: She’s the founder of The Inclusive Safety Project and the author of the book Design for Safety, which launches this month from A Book Apart. She is the Principal Designer at 8th Light, where she designs and builds custom software and consults on safe and inclusive design strategy. We know she’s an expert on designing technology to protect the vulnerable, but did you know she’s the international record holder for the most doughnuts performed in a forklift truck? My smashing friends, please welcome Eva PenzeyMoog. Hi, Eva, how are you?

Eva PenzeyMoog: I’m smashing.

Drew: It’s good to hear. I wanted to talk to you today about the principles of designing products and experiences with the safety of vulnerable users in mind. Would it be fair right from the outset to give some sort of trigger warning for any particular subjects that we might touch on?

Eva: Absolutely, yes. Thank you for bringing that up. Definitely trigger warning for explicit mentions of domestic violence, also possibly some elder abuse and child abuse.

Drew: That’s important. If you worry that any of those issues might be triggering for you, feel free to skip this one. We’ll see you on the next episode. Frame the conversation for us, Eva. When we’re talking about Design for Safety, what sort of safety issues are we talking about? We’re not talking about interfaces for self-driving cars. It’s not that sort of safety, is it?

Eva: Right, exactly. Yeah. When I’m talking about safety, I’m really talking about interpersonal safety: the ways that users can weaponize our products to harm each other in an interpersonal way. People who know each other, live together; lots of domestic violence from romantic partners, but also parents and children. There’s also a bit of employers and employees, more in the realm of surveillance. But the kind of safety I’m talking about requires an actual interpersonal relationship, as opposed to, yeah, someone anonymous on the internet or some anonymous entity trying to get your data, things like that.

Drew: Could it be issues as simple as … I think of what you see every day on social networks, where there’s the ability for different users to direct message each other, and how that’s supposed to be a helpful little tool to enable people to take a conversation offline or out of public. But that sort of thing could also, without the right safeguards, be a vector for some sort of abuse or control.

Eva: Yeah, absolutely. Definitely anytime you’re allowing users to send any type of text to each other, there’s the possibility for abuse. With Facebook messaging, that one’s a little more obvious, and I think … Well, I would hope that they do have some safeguards in place, that they recognize that maybe you don’t want to see certain messages or don’t want to let someone contact you. But one that’s really interesting and related, which I came across while doing research, is a lot of different banking applications or services like Venmo that let you share money. There’s often a space for a message. At least with Venmo, it’s required.

Eva: With some banks, it’s optional, but people will send one penny to someone along with some abusive message, something really harmful or scary or threatening, and there’s not really a way for the user receiving those messages to flag that or to say, “I want to block this user,” because why would you want to stop someone sending money to you? That’s a situation where I think the designers simply haven’t considered that abusers are always looking for ways to do things like that. They’re very creative, and it hasn’t been considered in the design.

Drew: We often talk about designing the happy path where everything is used as it’s designed to be used and the experience goes smoothly. Then as engineers, we think about, well, how things might go wrong in terms of validation failing or APIs being down. But I’m not sure … As an industry, do we have a big blind spot about ways the technologies could be misused when it comes to considering the safety of our users?

Eva: Yeah. I absolutely think there’s a giant blind spot. People are very familiar with these various threat models, like I mentioned: the anonymous person harassing you on Twitter, different entities trying to hack into a banking company’s data, things like that. But what we call the domestic violence threat model is super different, and it’s one that most people aren’t thinking about. That was always the feedback when I gave my talk, “Designing Against Domestic Violence,” in the before times, before the pandemic stopped conferences: people saying, “I had never heard of this. I had no idea.” That’s the goal with my speaking, my book, and my work in general: to help people understand what this is and what to do about it, because it is just an enormous blind spot.

Drew: I think we do have a tendency, and obviously it’s dangerous, to presume that every user is just like us. Just like the people who are building the service or product, just like ourselves, like our friends and our family and the people that we know, and to presume that everyone is in a stable home situation and has full ownership or control of their services and devices. That’s not always the case, is it?

Eva: Yeah, absolutely. Definitely not always the case. I think we might look at our friends and family and think that everyone is in a good relationship, but something that I’ve found is that most people who go through domestic violence aren’t exactly telling everyone in their life and shouting it from the rooftops. Based on the statistics, it’s so common that you probably do know someone who’s been in that situation or is currently in that situation, and they just aren’t really talking about it, or maybe they’re not sharing the full extent of the behavior.

Eva: In a lot of ways, it’s understandable that it’s not something people have really thought about in the workplace because it’s not something we think about in society and life in general and we reproduce that in our workplace. My work is trying to get us to talk about it a little more explicitly.

Drew: What are some of the things we should be thinking about when it comes to these considerations? Just thinking about when somebody else might have access to your account, or if a partner knows your password and can get in: you would think that these products have been designed to be controlled by one person, but now maybe somebody nefarious is accessing it. What sort of considerations are there?

Eva: Yeah. Well, there are so many different things, but that is a really big one. Three main chapters in my new book are focused on the three big areas where this happens, and what you just mentioned is the focus of one of them: control and power issues with products that are designed for multiple people. Things like a shared bank account, things like Netflix or Spotify, things like all the different home devices, Internet of Things devices, that are ostensibly meant for multiple people. But there’s the assumption that everyone is a respectful person who’s not looking to find another way to enact power and control over the people around them.

Eva: A lot of joint bank accounts or things like shared credit card services masquerade as joint accounts, but really one person has more power. For example, this happened to me, and it was really frustrating because I handle most of the finances in my marriage. But when we set up our first joint bank account years ago, they set my husband as the primary user, which basically meant that it was his publicly available data that got used to create a security quiz. When I log into our bank from a new Wi-Fi network, I have to ask him, “Which of these streets did you live on when you were a kid?” Some of them I can answer.

Eva: I know he’s never lived in California, but a lot of them are actually really good, and I have to ask him even though we’ve been together for a long time. They’re pretty effective at keeping someone out. But this is supposed to be a joint account, and it’s actually just an account for him that I also have access to. There are a lot of issues with that, where they’re allowing someone to have more control, because he could just not give me the answers, and then I wouldn’t have access to our finances without having to call the bank or go through some different process. Yeah. Lots of different issues with control.

Eva: I think whenever you’re designing a product that is going to involve multiple users, think through how one user is going to use this to control another person, and then how we can put in some safeguards against that, ideally making it so that one person doesn’t have control. If that’s not possible, how can we at least make sure that the other person understands exactly what’s happening and knows exactly how to regain power? Can we give them a number to call, some sort of setting to change? Whatever it is, it all gets kind of complicated.

Eva: I do have a whole process in the book about what this actually looks like in practice, something a little more specific than “just consider domestic violence” or “just consider who’s in control.” I don’t find that type of advice super useful. I do have a very thorough process that designers can put on top of their existing design process to get at some of this stuff.

Drew: I guess, where you have these accounts … Having an account is such a commonplace concept. We’re building products or services where the fundamental building block is, okay, we’ve got a user account. We probably don’t even think too closely about these sorts of issues when setting that up, or whether the account is different from the people who are responsible for it. Often, they’re just considered to be one entity, and then you have to tack other entities on to create joint accounts and those sorts of things. But there’s also the issue of what happens to that account if two people go their separate ways: how can it be split apart in the future? Is that something that we should be thinking about from the outset?

Eva: Yeah, absolutely. That’s a really good point you bring up. One of the things that I feel really strongly about is that when we center the survivors of different types of abuse in our design, we end up making products that are better for everyone. I interviewed a fair number of people specifically about the financial abuse element, which is really common in domestic violence settings. The statistic is that in 99% of domestic violence relationships, there’s some element of financial abuse. But I also ended up interviewing some people who had tragically lost their spouse, whose partner had died, and they had a joint account.

Eva: It’s a very common scenario, sadly, but it’s not something that lots of these products are designed to handle, and it can take years to actually get full control over a shared account. When my grandma died, she had a lot of foresight and she had given my dad access to everything. But even with that, it still took him a long time to get everything squared away, because these products just aren’t designed to handle things like that. But if we were to center survivors and think about what it looks like when two people split up, and be able to handle that effectively, that would ultimately help a bunch of other people in other situations.

Drew: We think a lot about the onboarding process and creating new accounts and bringing people into a product, and then forget to consider what happens when they leave by whatever means, whether they unfortunately die, and how that gets rounded off at the other end of the process. I think it’s something that doesn’t get the attention it could really benefit from.

Eva: Yeah.

Drew: We carry phones around in our pockets, and they are very personal devices, often literally the keys to our information, finances, and communication. In a negative situation, the fact that it’s such a personal device can easily become a vector for control and abuse. Just think about location services like Apple’s Find My, which is great if you’ve got school-aged kids and you can check in and see that they’re where they’re supposed to be and that they’re safe. It is a safety feature in a lot of ways, but that feature can be subverted, can’t it?

Eva: Yeah, absolutely. Yeah, and I’m glad you bring that up, because so many of these products are safety features for kids. Of course parents want to know where their kids are; they want to make sure that they’re safe, and that can be a really effective tool. I do think there are a lot of issues with parents overusing these products. I found some cases of college students who are still being checked on by their parents and will get a call if they go to a party off campus: “Why aren’t you in your dorm room?” Things like that. It can get to be too much. But yeah, for the most part, those are great products. But a lot of people then misuse them to track adults who are not consenting to having their location tracked, and a lot of times they either …

Eva: With Google Maps location sharing, for example, you have to go into the service to see that you’re sharing your location with someone. There’s no alert. It’s similar with Find My. The user whose location is being tracked does get an alert, but in a domestic violence situation, it’s really easy for the abuser to just delete the alert from the person’s phone before they can see it, and then there’s not really another way for that person to realize this is even happening. I think that’s a good example of abuse cases not being considered when we’re creating things that are ultimately about safety for kids. We have to realize that there are tons of people out there who are going to use it on people who aren’t kids, in these other settings.

Drew: I suppose in a relationship, you may give consent for your location to be tracked quite willingly at one point in time, and then you may not understand that that continues, and might not be aware that that’s still going on and you’re being tracked without realizing.

Eva: Yeah. That’s a really important thing to consider, because within abusive relationships, the abuse doesn’t start on day one, for the most part. It’s usually a really great relationship at first, and then they slowly introduce different forms of control, taking power and removing the person from their support network, and this all happens over time, often over years, because if you started doing this on the first date, most people would be like, “Yeah, no, I’m out.” But once there’s this loving relationship, it becomes a lot harder to just leave that person.

Eva: But yeah, a lot of times things that were totally safe to do at the beginning of the relationship are no longer safe, but the person has long since forgotten that they shared their location with this person, and again, there’s not a good way to be reminded. There are some things, though. To their credit, Google sends an email every 30 days, although some people have said they don’t actually receive them that frequently, and some people do. I’m not sure exactly what’s going on, but they do send a summary email listing all the people you’re sharing your location with, which is really awesome.

Eva: But I do think a lot of damage can be done in 30 days. I would prefer something more frequent, or even an omnipresent indicator that lets you know this is happening, rather than a one-off notification that the abuser can just delete. Yeah, that’s a really good point about consent. A lot of this comes from consent practices around sexual assault, and I think there’s so much relevance for tech. Just because you consented to something in the past doesn’t mean you consent to it now or in the future. But in tech, we’re like, “Well, they consented five years ago, so that consent is still valid,” and that’s really not the case. We should be getting their consent again later on.

Drew: Yes, it presents all sorts of challenges, doesn’t it, in how these things are designed? You don’t want to put so many roadblocks into the design of a product that it becomes not useful. Or there’s the case where you’re tracking a child who hasn’t reconsented that day, and all of a sudden they’re missing and the service isn’t enabled. But again, it’s about making sure that consent only carries on for as long as it’s truly given. In a shared document, if you’re using Google Docs or whatever, it’s easy enough to see who’s looking at that document at that time: the avatars of all the different users who are there and have access appear. Do you think those sorts of solutions could work equally well for when people are accessing your location?

Eva: Yeah, totally. Yeah, it does get sticky. There aren’t a lot of straightforward, easy solutions with this stuff. Maybe it’s not a great idea to have your eight-year-old give consent every single day, because what if one day they just say, “No,” or they mistakenly say no or whatever, and then all of a sudden you can’t find them? Yeah, that’s a real scenario. With some of this stuff, I don’t think it’s realistic to say, “Well, this product shouldn’t exist,” or “You should get consent every day.”

Eva: But even in those cases, there are still things you can do, like telling the person that this other user can view their location, even if there’s not a lot they can do about it. At the very least, giving them that information so that they clearly understand what’s happening and can take actions to keep themselves safe if they’re in an abusive relationship is going to be really useful. Maybe now they know, “Okay, I’m not going to take my phone with me when I leave the office during my lunch hour to see my friend who my partner doesn’t approve of, because she is always advocating that I leave the relationship, and he would know that I had gone somewhere if I bring my phone.”

Eva: “But if I just keep my phone at the office, then he won’t know.” Being able to make those types of informed decisions matters. Even if you’re not able to end the location sharing, there are definitely other things we can do to keep users safe while still preserving the core functionality of the feature or product.

Drew: Yes. It comes down to design decisions, doesn’t it? And finding solutions to difficult problems, but first understanding that the problem is there and needs to be solved for, which is why I think this conversation is so important in understanding the different ways things are used. Increasingly, we have devices with microphones and cameras in them. We have plenty of surveillance cameras in our homes and on our doorbells, and covert surveillance isn’t just something from spy movies and cop shows anymore, is it?

Eva: Yeah. Oh, yeah. It’s such a huge problem. I have very strong feelings about this stuff, and I know a lot of people are really into these devices, and I think that’s totally fine. I do think they’re misused a lot for surveillance, by a lot of spouses and family members. This is where, with children, it becomes a little more clear-cut to me, at least, that even children have some rights to privacy, especially when you look at teenagers, who need a lot more independence and space; there’s literally brain development going on around independence.

Eva: I think there are ways to help your kids be safe online and make good decisions, and also to sometimes check in on what they’re doing, without constantly watching them or injecting yourself into their lives in ways that they don’t want. But yeah, the plethora of different surveillance devices is just out of control, and people are using them all the time to watch each other covertly, or even overtly. Sometimes it’s out in the open: “Yeah, I’m watching you. What are you going to do about it? You can’t, because we’re in this relationship where I’ve chosen to use violence to keep my power and control over you.”

Eva: It becomes a really big problem. Something that I came across a lot is that it becomes one more way for the abuser to isolate the survivor from their support network. You can’t have a private phone conversation with your friend or your sibling or your therapist. Suddenly, there’s nowhere in your home that’s actually a private space, which has also been a really big problem during the pandemic, when people are forced to be at home. We’ve seen a huge increase in domestic violence, as well as tech-facilitated domestic violence, because abusers have had more time to figure out how to do these things, and it’s a much smaller space that they have to wire up for control. A lot of people have been doing that. It’s been a really big problem.

Drew: I would expect that the makers of these sorts of products, surveillance cameras and what have you, would say, “We’re just making tools here. We don’t have any responsibility over how they’re used. We can’t do anything about that.” But would you argue that, yes, they do have a responsibility for how those tools are used?

Eva: Yeah, I would. First of all, I would tell someone who said that, “You’re a human being before you’re an employee at a tech company, a capitalist moneymaker person. You’re a human being, your products are affecting human beings, and you’re responsible for that.” The second thing I would say is that demanding a higher level of tech literacy from our users is a really problematic mindset, because it’s easy for those of us who work in tech to say, “Well, people just need to learn more about it. We’re not responsible if someone doesn’t understand how our product is being used.”

Eva: But the majority of people don’t work in tech, though there are obviously still plenty of really tech-savvy people out there who don’t work in tech. But demanding that people understand exactly how every single app they have works, every single thing that they’re using on their phone or their laptop, every single device that they have in their homes, understanding every single feature and identifying the ways that it could be used against them: that’s such a huge burden. It might not seem like a big deal if you’re just thinking about your one product, like, “Oh, well, of course people should take the time to understand it.”

Eva: But we’re talking about dozens of products that we’re putting the onus on people who are going through a dangerous situation to understand, which is very unrealistic and pretty inhumane, especially considering what abuse and surveillance do to your brain if you’re constantly in a state of being threatened and in survival mode all the time. Your brain isn’t going to have full executive functioning for looking at an app and trying to identify how your husband is using it to watch you or control you or whatever it is. I would say that’s honestly just a crappy mindset to have, and that we are responsible for how people use our products.

Drew: When you think that most people don’t understand more than one or two buttons on their microwave, how can they be expected to understand the capabilities and functioning of all the different apps and services they come into contact with?

Eva: Absolutely. Yeah.

Drew: When it comes to designing products and services, I feel as a straight white English speaking male that I’ve got a huge blind spot through the privileged position that society affords me, and I feel very naïve and I’m aware that could lead to problematic design choices in things that I’m making. Are there steps that we can take and a process we can follow to make sure that we’re exposing those blind spots and doing our best to step outside our own realm of experience and include more scenarios?

Eva: Yes, absolutely. I have so many thoughts about this. I think there are a couple of things. First, we’re all responsible for educating ourselves about our blind spots. Everyone has blind spots. Maybe a cis white male has more blind spots than other groups, but it’s not like there’s some group that has none. Everyone has them. So, educating ourselves about the different ways that our tech can be misused. Obviously, interpersonal safety is the thing I work on. But there are all these other issues, too, that I’m also constantly trying to learn about, figuring out how to make sure that the tech I’m working on isn’t going to perpetuate them.

Eva: I really like Design for Real Life by Sara Wachter-Boettcher and Eric Meyer; it’s great for inclusive and compassionate design. I’ve also been learning about algorithms and racism and sexism and different issues with algorithms. There are so many different things that we need to consider, and I think we’re all responsible for learning about them. Then I also think it’s about bringing in the lived experience of people who have gone through these things once you’ve identified, “Okay, racism is going to be an issue with this product,” and we need to make sure we’re dealing with it, trying to prevent it, and definitely giving people ways to report racism or what have you.

Eva: The example I give in my book is Airbnb, which has a lot of issues with racism and racist hosts. There’s even a study showing that if your photo is of a black person, your requests to book a stay are going to get denied more frequently than if you have a white person in your photo. As a white person, that’s not something I think I could just go and learn about and then speak on as an authority. In that case, you need to bring in someone with that lived experience who can inform you, so hiring a black design consultant, because obviously we know there’s not great diversity in tech.

Eva: Ideally, you would already have people on your team who could speak to that, but then it gets complicated. This is where it gets into whether we demand that sort of labor from our teammates, which can be problematic too. The black person on your team is probably already facing a lot of different things, and then to have the white people say, “Hey, talk to me about traumatic experiences you’ve had because of your race.” We probably shouldn’t be putting that type of burden on people, unless …

Eva: Plenty of people will willingly bring that up and speak about it, and I will speak about my experience as a woman, but it’s maybe not something I want to do every single day. In that case, hire people who do that for work, and always pay people for their lived experience, making it not exploitative by actually compensating them for that knowledge and experience.

Drew: Yeah. I think it really does underscore how incredibly important and beneficial it is to have diverse teams working on products, bringing in all sorts of different experiences.

Eva: Yeah, absolutely.

Drew: One of the things that you cover in your book in the design process is creating abuser and survivor archetypes to help you test your features against. Could you tell us a little bit about that idea?

Eva: Yeah. This came out of wanting to have a sort of persona artifact that would help people understand very clearly what the problem is. It comes after the team has done research into the issue, has identified the likely issues when it comes to interpersonal safety, and can very clearly articulate what those are. Then you make the abuser archetype, which is the person who is trying to use your product for whatever the harm is, and the survivor archetype, who is going to be the victim of that harm. The important thing about these is the goals. You find a picture, or you don’t even need a picture, but it articulates what the abuse is and then the person’s goals.

Eva: If it’s someone who wants to figure out where his ex-girlfriend lives now because he wants to stalk her, his goal is to stalk her. Then the survivor’s goal … Well, sorry, the abuser’s goal would be to use your product. Let’s say it’s Strava, which is one of the examples I use in the book: “I want to use Strava to track down my ex-girlfriend.” Then the survivor archetype says, “I want to keep my location secret from my ex, who is trying to stalk me.” You can use those goals to help inform your design and to test your product: is there anything about the survivor’s location data that is publicly available to someone who’s trying to find them, even if they have enabled all of their privacy features?

Eva: I use Strava as the example because up until a few months ago, there was that ability. Even if you had set everything to private, if you were running or exercising near someone else using the app for a certain amount of time (it’s unclear how close you had to be or how long you had to be running on the same street as the other person), it would tag you as having appeared in their workout. That would be an example where the abuser was able to meet his goal: he was able to find his ex in this way. Then you would know, okay, we need to work against that and prevent that goal from being successful.

Drew: Obviously, you can’t think up every scenario. You can’t work out what an abuser would try to do in all circumstances. But by covering some key apparent problems that could crop up, I guess you’re closing lots of doors to other lines of abuse that you haven’t thought of.

Eva: Yes. Yeah, exactly. That brings up another really good related point, which is that, yeah, you’re probably not going to think of everything. So you need ways for users to report issues, and you need to be the type of team and company that can take the criticisms or issues users identify with some grace and quickly course-correct, because there are always going to be things you don’t think about, and users are going to uncover all sorts of things. I feel like this always happens. Having a way to get that feedback and then quickly course-correct is also a really big part of designing for safety.

Drew: Is there a process that would help you come up with these potential problems? Say you’re designing a product that uses location data, what process would you go through to think of the different ways it could be abused? Is there anything that helps in that regard?

Eva: Yeah. This is something I go into in more depth in the book, but doing some research first is the first step. Location services is a pretty easy one, so to speak. There are so many documented issues with location services. There have also been academic studies on this, and there’s lots of literature out there that can help inform the issues you’re going to face. Then, after doing this research, the other thing I suggest teams do is brainstorm novel use cases that haven’t been covered elsewhere.

Eva: The way I usually do this is to have the team do a Black Mirror brainstorm. Let’s make a Black Mirror episode. What’s the worst, most ridiculous, anything-goes, worst-case scenario for the product or feature we’re talking about? People usually come up with some really wild stuff, and it’s actually usually really fun. Then you say, “Okay, let’s dial it back. Let’s use this as inspiration for some more realistic issues that we might come across,” and people are usually able to identify all sorts of things that their product might enable.

Drew: For people listening who feel like they would really love to champion this area of work within their organization, do you have any advice as to how they could go about doing that?

Eva: Yeah. There’s a lot about this in the book, about integrating this into your practice and bringing it to your company. There’s advice for things like talking to a reluctant stakeholder whose only concern is, well, how much is this going to cost me? How much extra time is this going to take? Being able to give really explicit answers to questions like that is really useful. There are also recordings of my conference talk, after which people usually say, “I just had no idea that this was a thing.” You can use that to help educate your team or your company.

Eva: I talk about this in the book too. Honestly, it can be awkward and weird to bring this stuff up, so it helps to be mentally prepared for how it’s going to feel to say, “We should talk about domestic violence,” or, “We should talk about invasive child surveillance.” It can be really hard and just weird. One piece of advice I give is to talk ahead of time to a supportive coworker who can back you up if you’re going to bring this up in a meeting; that helps reduce the weirdness. There are some other tactics in the book, but those are definitely the big ones.

Drew: I would normally ask at this point where our listeners should go to find out more about the topic, but I know the answer is actually to go and read your book. We’ve only really scratched the surface of what’s covered in Design For Safety, which is out now, this August 2021, from A Book Apart. For me, the book is sometimes an uneasy read in terms of content, but it’s superbly written and it really opened my eyes to a very important topic. One thing I really like about all the A Book Apart books is that they’re small, focused and easy to consume. I would really recommend that listeners check out the book if the topic interests them.

Eva: Yeah, thanks for that. Theinclusivesafetyproject.com is the website I have to house all of this information. There are a lot of great resources in the back of the book for people who want to learn more. But if you want something more immediate, you can go to Theinclusivesafetyproject.com, where there’s a resources page with articles and studies to look at, people working in related spaces to follow on Twitter, books to read, and things like that.

Drew: Right. I’ve been learning what it means to design for safety. What have you been learning about, Eva?

Eva: I have been learning about data. I’m reading a really interesting book called Living in Data by Jer Thorp. I thought it was going to be all about different issues with big data, which is such a big topic, but it’s actually an extremely thoughtful and much more interesting look at what it means to live in data: how much data is taken from us every day, what’s done with it, and just the data out there in the world. It’s really interesting and important, and yeah, I would definitely recommend that book.

Drew: Oh, amazing. If you, the listener, would like to hear more from Eva, you can follow her on Twitter, where she’s @epenzeymoog, and you can find all her work linked from her website at evapenzeymoog.com. Design For Safety is published by A Book Apart at abookapart.com and is available now. Thanks for joining us today, Eva. Do you have any parting words?

Eva: Please get vaccinated so that we can go back to normal.
