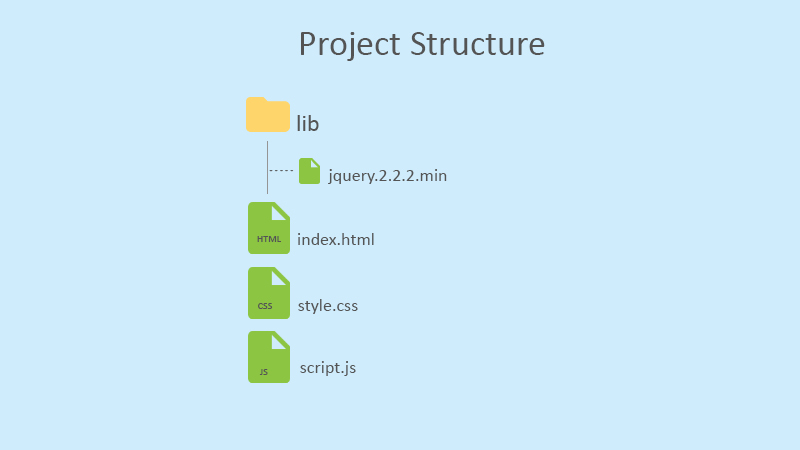

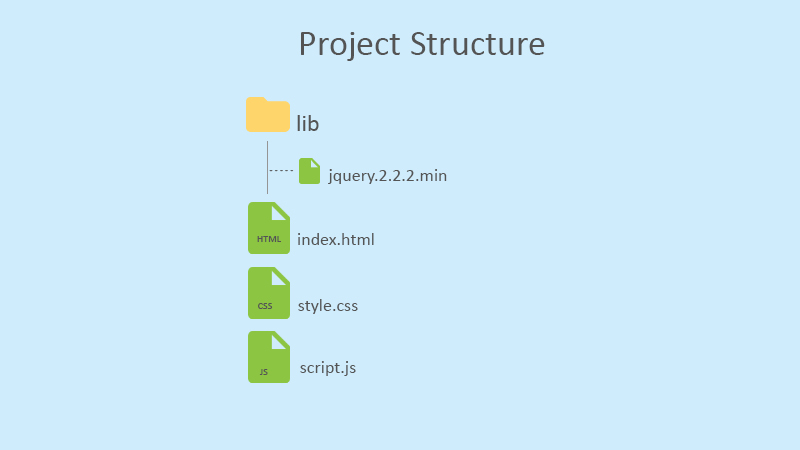

Build Live Emotions Capture App Using Emotions API (Part II)

Aloha to the last part of the series. I hope you are ready with the development environment. You know, secret Santa told me that you are very excited to jump in and build the app.

In this article we will look at some of HTML5’s cool features, such as getUserMedia() and canvas, and at converting a canvas drawing into a BLOB. For the sake of simplicity and lucid writing, I have divided the code into small chunks.

Basic HTML markup (index.html)

    <!DOCTYPE html>
    <html>
    <head>
      <link href="style.css" rel="stylesheet" type="text/css" />
      <title>Emotions API Demo</title>
    </head>
    <body>
      ... ... ...
      <video width="70%" autoplay></video>
      <canvas id="canvas" width="200" height="200"></canvas>
      <span id="capture-button">Capture</span>
      <span id="use-this">Use this</span>
      ... ... ...
      <script src="lib/jquery-2.1.4.js"></script>
      <script src="script.js"></script>
    </body>
    </html>

Basic CSS styling (style.css)

    video, canvas {
      background: #f9f9f9;
      border: solid 1px #f1f1f1;
      border-radius: 3px;
      margin: 20px 0;
    }

    span {
      display: inline-block;
      background: tomato;
      padding: 10px;
      margin: 20px 0;
      color: #fff;
      border-radius: 3px;
      cursor: pointer;
      -webkit-user-select: none;
      -moz-user-select: none;
    }

JavaScript (script.js)

1. getUserMedia() method

The first task is to look for the getUserMedia() method under its various vendor prefixes and normalize it by storing whichever one exists in navigator.getUserMedia.

    // Check the various vendor-prefixed versions of getUserMedia.
    navigator.getUserMedia = (navigator.getUserMedia ||
                              navigator.webkitGetUserMedia ||
                              navigator.mozGetUserMedia ||
                              navigator.msGetUserMedia);

The second task is to check whether the method is supported in the current browser. For this, we will use a simple if-else condition. If it is supported we continue; otherwise an alert message appears. According to MDN, browser support for getUserMedia() is good once the vendor prefixes are taken into account.

    // Check that the browser supports getUserMedia.
    // If it doesn't, show an alert; otherwise continue.
    if (navigator.getUserMedia) {
        //code will go here
    } else {
        alert('Sorry, your browser does not support getUserMedia');
    }

Note: At the time of developing the app, navigator.getUserMedia() was already deprecated. The method has since been removed from the Web standards, and its replacement is the promise-based navigator.mediaDevices.getUserMedia(). Read more about MediaDevices.getUserMedia() on Mozilla Developer Network.
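As a hedged sketch of how the feature check could look with the newer API: the helper below prefers the promise-based navigator.mediaDevices.getUserMedia() and wraps the older callback-style variants in a Promise. The name `resolveGetUserMedia`, the choice to pass the navigator object in as a parameter, and the `onStream`/`onError` handlers in the usage comment are illustrative assumptions, not part of the original app.

```javascript
// Hypothetical helper: returns a promise-returning function,
// or null when no flavour of getUserMedia is available.
function resolveGetUserMedia(nav) {
  // Preferred: the promise-based API on navigator.mediaDevices.
  if (nav.mediaDevices && typeof nav.mediaDevices.getUserMedia === 'function') {
    return function (constraints) {
      return nav.mediaDevices.getUserMedia(constraints);
    };
  }

  // Fallback: legacy callback-style methods, including vendor prefixes.
  var legacy = nav.getUserMedia || nav.webkitGetUserMedia ||
               nav.mozGetUserMedia || nav.msGetUserMedia;
  if (!legacy) {
    return null;
  }

  // Wrap the callback signature so callers always get a Promise.
  return function (constraints) {
    return new Promise(function (resolve, reject) {
      legacy.call(nav, constraints, resolve, reject);
    });
  };
}

// Usage in a browser (commented out so the sketch also loads elsewhere):
// var getMedia = resolveGetUserMedia(navigator);
// if (getMedia) {
//   getMedia({ video: true }).then(onStream, onError);
// } else {
//   alert('Sorry, your browser does not support getUserMedia');
// }
```

Passing the navigator object in, rather than reading the global, keeps the helper easy to try out with a stand-in object.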

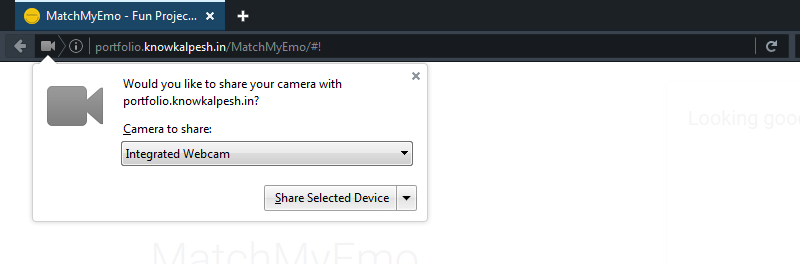

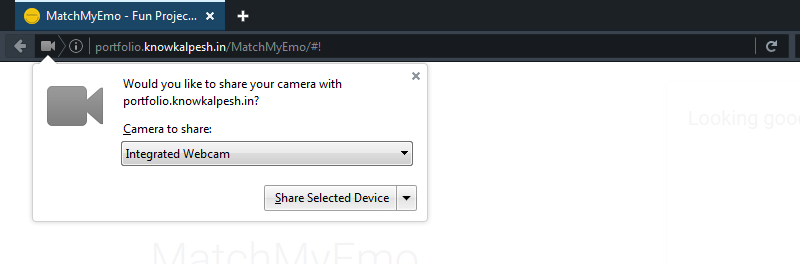

2. Request the camera

Once we have checked support for the getUserMedia() method, the very next thing is to request permission from the user.

The standard syntax is navigator.getUserMedia(constraints, successCallback, errorCallback);

2.1 constraints

This is an object in which you specify the types of media to request, such as video or audio, along with any extra parameters your app requires.
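For illustration, here are a few constraint objects of the kind described above. The `width`, `height` and `facingMode` keys are standard media track constraints, but the specific values are only examples, and the variable names are made up for this sketch. Older vendor-prefixed implementations may not understand the detailed form.

```javascript
// Video only, with browser defaults (what this article uses).
var videoOnly = { video: true };

// Video plus microphone.
var videoAndAudio = { video: true, audio: true };

// Video with preferences: "ideal" values are hints, not hard requirements.
var tunedVideo = {
  audio: false,
  video: {
    width: { ideal: 640 },
    height: { ideal: 480 },
    facingMode: 'user' // front-facing camera where one exists
  }
};
```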

We only need video and won’t pass any extra parameters, so our call will look like this:

navigator.getUserMedia( { video: true }, successCallback, errorCallback );

This piece of code asks the user for access to their web camera. If the user grants access, successCallback runs; otherwise errorCallback runs, which is unfortunate because our app cannot work without the camera.

2.2 successCallback

The success callback always receives one parameter, the media stream, which we can turn into a live video feed by creating an object URL with the createObjectURL() method.

    function (localMediaStream) {
        var video = document.querySelector('video');
        video.src = window.URL.createObjectURL(localMediaStream);
        //more code will be here
    },

At this point you should see live video streaming in your browser. Say hello to yourself there!
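One caveat, offered as a hedged aside: newer browsers no longer accept a media stream in createObjectURL() and expect the stream to be assigned to the video element’s srcObject property instead. Here is a minimal sketch that tries srcObject first and falls back to the approach above. The helper name `attachStream` and its `urlApi` parameter are illustrative assumptions.

```javascript
// Hypothetical helper: connect a media stream to a <video> element.
function attachStream(video, stream, urlApi) {
  if ('srcObject' in video) {
    // Modern path: hand the stream to the element directly.
    video.srcObject = stream;
  } else {
    // Legacy path, as used in this article.
    video.src = urlApi.createObjectURL(stream);
  }
  return video;
}

// Usage inside the success callback (browser only):
// attachStream(document.querySelector('video'), localMediaStream, window.URL);
```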

The next thing is to paint the captured image on a canvas. For this, we will leverage the HTML5 canvas.

First, we will declare a few global variables that we can use in subsequent code snippets.

    //global vars
    var dataURL, context, canvas;

Let’s grab the canvas from our markup and get its 2D drawing context.

    canvas = document.getElementById("canvas");
    context = canvas.getContext("2d");

The snippet below takes the current frame from the video and paints it onto the canvas we just set up.

    document.getElementById("capture-button").addEventListener("click", function () {
        // Copy a 600x450 region of the video, starting at (20, 20),
        // into the 200x200 canvas.
        context.drawImage(video, 20, 20, 600, 450, 0, 0, 200, 200);
    });
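The nine-argument form of drawImage() used above takes a source rectangle (x, y, width, height) from the video and scales it into a destination rectangle on the canvas. The hard-coded 600x450 source region assumes a particular camera resolution and is squeezed into a 200x200 square. As a sketch under that observation, the hypothetical helper `centerSquareCrop` below computes a centered square source region from the actual frame size, which avoids the distortion.

```javascript
// Hypothetical helper: largest centered square inside a frame.
function centerSquareCrop(frameWidth, frameHeight) {
  var size = Math.min(frameWidth, frameHeight);
  return {
    sx: Math.floor((frameWidth - size) / 2),  // left edge of the square
    sy: Math.floor((frameHeight - size) / 2), // top edge of the square
    size: size
  };
}

// Usage inside the capture handler (browser only):
// var crop = centerSquareCrop(video.videoWidth, video.videoHeight);
// context.drawImage(video, crop.sx, crop.sy, crop.size, crop.size,
//                   0, 0, canvas.width, canvas.height);
```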

As I mentioned in the first article, we won’t store users’ captured images on our server; the whole operation is carried out on the client side. I have kept that promise. To do so, we will use a function that converts our canvas image into a Binary Large Object (BLOB). This function is taken from one of Eric Bidelman’s projects on GitHub and is used here only for personal and demo purposes.

Before we use this function, let’s give the user the choice of processing the captured photo or taking another one. (This is must-have functionality in today’s photosensitive world, where people want to try many photos before processing one.)

    document.getElementById("use-this").addEventListener("click", function () {
        dataURL = canvas.toDataURL("image/png");
        //function to create BLOB will be here
    }

The above code snippet converts our canvas image to a data URL. Let’s pass this variable to our magical function.

    var makeblob = function (dataURL) {
        var BASE64_MARKER = ';base64,';
        var parts, contentType, raw;

        // Data URL without base64 encoding: the payload is URL-encoded text.
        if (dataURL.indexOf(BASE64_MARKER) == -1) {
            parts = dataURL.split(',');
            contentType = parts[0].split(':')[1];
            raw = decodeURIComponent(parts[1]);
            return new Blob([raw], { type: contentType });
        }

        // Base64 data URL: decode to a binary string, then copy it byte by byte.
        parts = dataURL.split(BASE64_MARKER);
        contentType = parts[0].split(':')[1];
        raw = window.atob(parts[1]);
        var rawLength = raw.length;
        var uInt8Array = new Uint8Array(rawLength);
        for (var i = 0; i < rawLength; ++i) {
            uInt8Array[i] = raw.charCodeAt(i);
        }
        return new Blob([uInt8Array], { type: contentType });
    };
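As an aside, browsers that implement canvas.toBlob() can produce the Blob directly, without going through a data URL. This is a different technique from the makeblob function above, not the one the app uses. A minimal sketch follows, in which the wrapper name `canvasToBlob` is an illustrative assumption.

```javascript
// Hypothetical wrapper: turn canvas.toBlob()'s callback into a Promise.
function canvasToBlob(canvas, type) {
  return new Promise(function (resolve, reject) {
    if (typeof canvas.toBlob !== 'function') {
      reject(new Error('canvas.toBlob is not supported in this browser'));
      return;
    }
    canvas.toBlob(function (blob) {
      // The callback receives null when the image could not be encoded.
      if (blob) {
        resolve(blob);
      } else {
        reject(new Error('The canvas could not be converted to a Blob'));
      }
    }, type || 'image/png');
  });
}

// Usage (browser only):
// canvasToBlob(canvas).then(function (blob) { /* send blob to the API */ });
```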

This function returns a BLOB that we can pass to our AJAX call to get the result. Before we make the AJAX call to the API server, we need to store our API key in a variable.

var emotion_api_key = "abcdefghijklmnopqrstuvwzyz1234"; //replace this with your API key

Now, we are set to send the image of the user to get emotions result. Let’s crack a few more pieces of ice.

    $.ajax({
        url: "https://api.projectoxford.ai/emotion/v1.0/recognize",
        beforeSend: function (xhrObj) {
            // Request headers
            xhrObj.setRequestHeader("Content-Type", "application/octet-stream");
            xhrObj.setRequestHeader("Ocp-Apim-Subscription-Key", emotion_api_key);
        },
        type: "POST",
        // Request body: the raw image bytes
        data: makeblob(dataURL),
        processData: false, // keep jQuery from serializing the Blob
        success: function (data) {
            var result = data;
            //code to show result will be here
        }
    })
    .fail(function (data) {
        alert("Code: " + data.responseJSON.error.code + " Message:" + data.responseJSON.error.message);
    });
    }); // closes the "use-this" click handler opened earlier

The above code skeleton can be found on the Project Oxford site under API reference of Emotions API. Please note that we have altered a few things in the skeleton to work in our case.

The above code gives us a JSON object, which is stored in the result variable. Now you have all the power to display it on the page.
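As one hedged example of working with the result variable, the sketch below picks the strongest emotion for each detected face. It assumes the response is an array with one entry per face, each carrying a `scores` object that maps emotion names to numbers; that shape, the sample data and the helper names `dominantEmotion` and `summarize` are assumptions for illustration, so check them against the actual response you receive.

```javascript
// Hypothetical helper: name of the highest-scoring emotion, or null if none.
function dominantEmotion(scores) {
  var best = null;
  Object.keys(scores).forEach(function (name) {
    if (best === null || scores[name] > scores[best]) {
      best = name;
    }
  });
  return best;
}

// Hypothetical helper: one dominant emotion per detected face.
function summarize(result) {
  return result.map(function (face) {
    return dominantEmotion(face.scores);
  });
}

// Made-up sample in the assumed response shape.
var sampleResult = [
  {
    faceRectangle: { left: 10, top: 20, width: 100, height: 100 },
    scores: { anger: 0.01, happiness: 0.9, neutral: 0.08, sadness: 0.01 }
  }
];

// Usage inside the success handler:
// var emotions = summarize(result);
```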

With this, we have covered successCallback. Let’s have a look at errorCallback.

2.3 errorCallback

    // Error Callback
    function (err) {
        // Log the error to the console.
        console.log('The following error occurred when trying to use getUserMedia: ' + err);
    }
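Logging is enough for a demo, but a friendlier message could be derived from the error’s name. The sketch below is only an illustration: the helper name `describeMediaError` and the message texts are made up, and the legacy name `PermissionDeniedError` is an assumption about older implementations.

```javascript
// Hypothetical helper: map a getUserMedia error to a readable message.
function describeMediaError(err) {
  var name = err && err.name ? err.name : 'UnknownError';
  switch (name) {
    case 'NotAllowedError':
    case 'PermissionDeniedError': // assumed legacy name for the same case
      return 'Camera access was denied.';
    case 'NotFoundError':
      return 'No camera was found on this device.';
    case 'NotReadableError':
      return 'The camera is already in use or could not be started.';
    default:
      return 'Could not access the camera (' + name + ').';
  }
}

// Usage inside the error callback:
// alert(describeMediaError(err));
```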

With this, we have completed the whole if part. Let’s add an error message in the else part and call it good.

    else {
        alert('Sorry, your browser does not support getUserMedia');
    }

Working demo

I have already developed this project with a fancy UI and other functionality. You can visit MatchMyEmo project and explore the world of possibilities with Project Oxford.

As I already outlined in my last article, Google Chrome supports getUserMedia() only over secure connections. So this project will work only in Firefox.
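If you want to warn visitors up front, a page can check whether it is running in a secure context before asking for the camera. This is a minimal sketch, not part of the original project: the helper name `isSecureOrigin` is made up, and the fallback for browsers without window.isSecureContext is a rough approximation.

```javascript
// Hypothetical helper: is this page served from a secure origin?
function isSecureOrigin(win) {
  // Modern browsers expose the answer directly.
  if (typeof win.isSecureContext === 'boolean') {
    return win.isSecureContext;
  }
  // Rough fallback: HTTPS, or a local development host.
  var loc = win.location;
  return loc.protocol === 'https:' ||
         loc.hostname === 'localhost' ||
         loc.hostname === '127.0.0.1';
}

// Usage (browser only):
// if (!isSecureOrigin(window)) {
//   alert('Camera access may be blocked because this page is not served over HTTPS.');
// }
```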

Conclusion

MatchMyEmo is just a standalone small app for fun purposes. There are innumerable possibilities where you can use Artificial Intelligence APIs in your app. Some of the fascinating apps are already there in Microsoft’s App Gallery. Though these technologies are still in their beta phase and lack accuracy, it is a good time to learn them.

I hope you have enjoyed this series. Feel free to leave a comment about the project and about what you plan to build on top of the Project Oxford APIs.