Better Collaboration By Bringing Designers Into The Code Review Process

Better Collaboration By Bringing Designers Into The Code Review Process

Ida Aalen

Smooth collaboration between developers and designers is something everyone aspires to, but it’s notoriously difficult. But with today’s advanced web, it’s difficult — if not impossible — to build a truly great product without collaborating across disciplines. Because of the range of technologies required to build a product, the product can only truly succeed when all disciplines — developers and designers, content creators, and user experience strategists — are deeply involved from the early stages of the project. When this happens, all ends of what it takes to build a product come naturally together into a unified whole, and a thus great product.

Because of this, no one is really promoting waterfall processes anymore. Nevertheless, involving other people early on, especially people from other disciplines, can feel scary. In the worst case scenario, it leads to “design by committee.”

Moreover, both designers and content strategists often have backgrounds in fields in which a sole creative genius is still the ideal. Having someone else proof your work can feel like a threat to your creativity.

So how can you involve people early on so that you’re avoiding the waterfall, but also making sure that you’re not setting yourself up for design by committee? I found my answer when learning about code reviews.

The Aha! Moment

In July 2017, I founded Confrere together with two developers, and we quickly hired our first engineer (I’m not a developer myself, I’m more of a UX or content designer). Our collaboration was running surprisingly smoothly, so much so that at our retrospectives, the recurring theme was that we all felt that we were “doing it right.”

I sat down with my colleagues to try to pinpoint what exactly it was that we were “doing right” so that we could try to preserve that feeling even as our company grew and our team expanded. We came to the realization that we all appreciated that the whole team was involved early on and that we were being honest and clear in our feedback to each other. Our CTO Dag-Inge added: “It works because we’re doing it as peers. You’re not being berated and just getting a list of faults”.

The word “peer” is what gave me the aha moment. I realized that those of us working within UX, design, and content have a lot to learn from developers when it comes to collaboration.

Peer reviewing in the form of code reviews is essential to how software gets built. To me, code reviews offer inspiration for improving collaboration within our own fields, but also a model for collaborating across fields and disciplines.

If you’re already familiar with code reviews, feel free to skip the next section.

What Is A Code Review?

A code review can be done in various ways. Today, the most typical form of code review happens in the way of so-called pull requests (using a technology called git). As illustrated below, the pull requests let other people on the team know that a developer has completed code that they wish to merge with the main code base. It also allows the team to review the code: they give feedback on the code before it gets merged, in case it needs improvement.

Pull requests have clearly defined roles: there is an author and a reviewer(s).

As an example, let’s say our senior engineer Ingvild has made a change to Confrere’s sign-up flow. Before it is merged into the main code base and gets shipped, she (the author) creates a pull request to request a review from our CTO Dag-Inge (the reviewer). He won’t make any changes to her code, only add his comments.

It’s up to Ingvild how she wants to act on the feedback she received in the review. She’ll update her pull request with the changes she sees fit.

When the reviewer(s) approve the pull request, Ingvild can then merge her changes with the main code base.

Why Bother Doing Code Review?

If you’ve never done code review, the process above might sound bureaucratic. If you have doubts, here’s a ton of blog posts and academic research about the advantages of code review.

Code reviews set the tone for the entire company that everything we do should be open to scrutiny from others, and that such scrutiny should be a welcome part of your workflow rather than viewed as threatening.

Code review reduces risk. Having someone proof your work, and also knowing someone will proof your work, helps weed out errors and heightens quality. In addition, it ensures consistency and helps every team member familiarize with more of the code base.

When done right, code review also builds a culture for collaboration and openness. Trying to understand and critique other people’s work is an excellent way to learn, and so is getting honest feedback on your work.

Always having at least two people look over the code also curtails ideas of “my” code and “your” code. It’s our code.

Considering these advantages, a review shouldn’t just be for code.

Review Principles For All Disciplines, Not Just Code

With reviews, there is always one author and one or more reviewers. That means you can involve people early on without falling into design by committee.

First, I have to mention two important factors which will affect your team’s ability to do beneficial reviews. You don’t necessarily have to have mastered them, but as a minimum, you should aspire to the following:

- You and your colleagues respect each other and each other’s disciplines.

- You’re sufficiently self-assured in your own role so that you feel like you can both give and receive criticism (this is also connected to the team’s psychological safety).

Even if we’re not reviewing code, there’s a lot to learn from existing best practices for code reviews.

Within our team, we try to adhere to the following principles when doing reviews:

- Critique the work, not the author.

- Be critical, but remain affable and curious.

- Differentiate between a) Suggestions b) Requirements, c) Points that need discussion or clarification.

- Move discussions from text to face-to-face. (Video counts)

- Don’t forget to praise the good parts! What’s clever, creative, solid, original, funny, nice, and so on?

These principles weren’t actually written down until after we discussed why our collaboration was working so well. We all felt we were allowed to and expected to ask questions and suggest improvements already, and that our motivations were always about building something great together, and not about criticising another person.

Because we were being clear about what kind of feedback we were giving, and also remembered to praise each other’s good work, doing reviews was a positive force rather than a demotivating one.

An Example

To give you an idea of how our team uses review across disciplines and throughout a process, let’s look at how the different members of our team switched between the roles of author and reviewer when we created our sign-up flow.

Step 1: Requirements gathering

Author: Ida (UX)

Reviewers: Svein (strategy), Dag-Inge (engineering), Ingvild (engineering).

Whiteboard sessions can be exhausting if there’s no structure to them. To maintain productivity and creativity, we use the author/reviewer structure, even for something as seemingly basic as brainstorming on a whiteboard. In this case, in which we were coming up with the requirements for our sign-up flow, I got to be the author, and the rest of the team gave their feedback and acted as reviewers. Because they also knew they’d be able to review what I came up with in step 2 (plenty more opportunity for adjustments, suggestions, and improvements), we worked swiftly and were able to agree upon the requirements in under 2 hours.

Step 2: Mockup with microcopy

Author: Ida (UX)

Reviewers: Ingvild (engineering), Eivind (design), Svein (strategy).

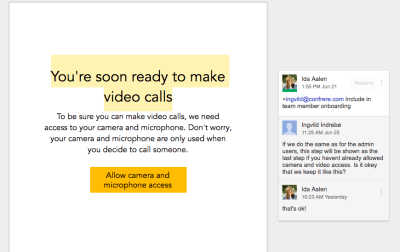

As an author, I created a mockup of the sign-up flow with microcopy. Did the sign-up flow make sense, from both the user and engineering perspective? And how could we improve the flow from a design and frontend perspective? At this stage, it was essential to work in a format in which it would be easy for all disciplines to give feedback (we opted for Google Docs, but it could also have been done with a tool like InvisionApp).

Step 3: Implementing the sign-up flow

Author: Ingvild (engineering)

Reviewer: Ida (UX) and Dag-Inge (engineering).

We had agreed upon the flow, the input fields, and the microcopy, and so it was up to Ingvild to implement it. Thanks to Surge, we can automatically create preview URLs of the changes so that people who can’t read code are able to give feedback at this stage as well.

Step 4: User testing

Author: Ida (UX)

Reviewer: The users.

Yes, we consider user testing a form of review. We brought our newly built sign-up flow face-to-face with actual users. This step gave us a ton of insight, and the most significant changes in our sign-up flow came as a result.

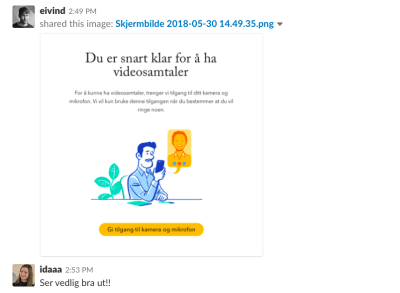

Step 5: Design

Author: Eivind (design)

Reviewers: Ingvild (engineering) and Ida (UX).

When design suddenly shows up here in step 5, it might look a lot like a waterfall process. However, our designer Eivind had already been involved as a reviewer since step 2. He gave a bunch of useful feedback at that stage and was also able to start thinking about how we could improve the design of the sign-up flow beyond the existing modules in our design system. At this step, Eivind could also help solve some of the issues that we identified in the user testing.

Step 6: Implementation

Author: Ingvild (engineering)

Reviewer: Eivind (design), Ida (UX) and Dag-Inge (engineering).

And then we’re back to implementing.

Why review works

In summary, there’s always just one author, thus avoiding design by committee. By involving a range of disciplines as reviewers early on, we avoid having a waterfall process.

People can flag their concerns early and also start thinking about how they can contribute later on. The clearly defined roles keep the process on track.

Regular Review Walkthroughs

Taking inspiration from code walkthroughs, we also do regular review walkthroughs with different foci, guided by the following principles:

- The walkthrough is done together.

- One person is in charge of reviewing and documenting.

- The idea is to identify issues, not necessarily to solve them.

- Choose a format that gives as much context as possible, so that it’s easy to act upon the findings later (e.g. InvisionApp for visual reviews, Google Docs for text, and so on).

We’ve done review walkthroughs for things such as accessibility audits, reviewing feature requests, auditing the implementation of the design, and doing heuristic usability evaluations.

When we do our quarterly accessibility reviews, our accessibility consultant Joakim first goes through the interface and documents and prioritizes the issues he’s found in a shared Google Sheet. Joakim then walks us through the most important issues he’s identified.

Meeting face-to-face (or at least on video) to go through the issues helps create an environment for learning rather than a feeling of being supervised or micromanaged.

If you find yourself always being tied up with something that’s due for release, or fixing whatever is at the top of your inbox, reviews can help remedy that. If you set aside regular half days for reviewing work you’ve already done, you can identify issues before they become urgent. It can also help you refocus and make sure you’re priorities are keeping along the right lines. Your team should maybe not begin building that new feature before you’re confident that the existing features are living up to your standards.

User Testing Is A Form Of Review

An important motivation for code reviews is to reduce risk. By doing it every single time you introduce a change or add something new to your product, and not just when you suspect something is maybe not up to par, you diminish the chance of shipping bugs or subpar features. I believe we should look at user testing from the same perspective.

You see, if you want to reduce the risk of shipping with major usability issues, user testing has to be part of your process. Just having your UX designers review the interface isn’t enough. Several studies have found that even usability experts fail in identifying every actual usability problems. On average, 1 in 3 issues identified by experts were false alarms — they weren’t issues for users in practice. But worse, 1 in 2 issues that users did in fact have, were overlooked by the experts.

Skipping user testing is just as big a risk as skipping code review.

Does Review Mean Death To Creativity?

People working within design, user experience, and content often have educational backgrounds from art schools or maybe literature, in which the sole creator or creative artistic genius is hailed as the ideal. If you go back in history, this used to be the case for developers as well. Over time, this has changed by necessity as web development has grown more complex.

If you cling to the idea of creativity coming from somewhere deep within yourself, the idea of review might feel threatening or scary. Someone meddling in your half-finished work? Ouch. But if you think about creativity as something that can spring from many sources, including dialogue, collaboration, or any form of inspiration (whether from the outside or from someplace within you), then a review is only an asset and an opportunity.

As long as we’re building something for the web, there’s no way around collaborating with other people, be it within our own field or others. And a good idea will survive review.

Let’s create something great together.

(rb, ra, yk, il)

(rb, ra, yk, il)

Articles on Smashing Magazine — For Web Designers And Developers